Tuning HTTP Keep-Alive in Node.js

We suffered some difficult-to-track-down ECONNRESET errors in our Node.js proxy service until we dug into how HTTP persistent connections work and what the Node.js HTTP defaults are.

Without keep-alive, an HTTP client opens a new TCP connection for every request. HTTP keep-alive lets clients reuse a connection for multiple requests, and relies on timeout settings on both the client and the target server to decide when to close open TCP sockets.

In Node.js clients, you can use a module like agentkeepalive to tell your HTTP/HTTPS clients to use persistent HTTP connections.

At ConnectReport, we have a proxy service that acts as the gateway for all requests across our different services, with targets including our management API and our core server. We use agentkeepalive in our proxy service to reduce latency in connections from the proxy to the targets, but we periodically experienced ECONNRESET errors thrown by the proxy. The errors were fairly rare, occurring in about 1 in 1,000 requests. Reproducing them was nearly impossible, and we only understood how they were being thrown once we did a deep dive into how HTTP persistent connections work.

HTTP persistent connections in a nutshell

Under the hood, HTTP persistent connections use OS-level sockets. In general, a client configures a limited number of sockets and a connection timeout. If nothing is sent down a socket for the duration of the timeout, the client closes that socket. On the other end of the connection is the target server, which may have its own keep-alive timeout. In Node.js, the server keep-alive timeout is 5 seconds by default. Node.js also has a headers timeout (which should be about 1 second greater than the keep-alive timeout), and it contributes to the persistent connection timeout behavior.
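To make socket reuse concrete, here is a minimal sketch that uses Node's built-in `http.Agent` with `keepAlive: true` (standing in for agentkeepalive, which is not required here). The helper names `getLocalPort` and `reusesSocket` are made up for illustration. It starts a throwaway local server, sends two sequential requests, and compares the local port of the socket that carried each one.

```javascript
const http = require("node:http");

// Send one GET request through the given agent and resolve with the
// local port of the TCP socket that carried it.
function getLocalPort(agent, port) {
  return new Promise((resolve, reject) => {
    const req = http.get({ host: "127.0.0.1", port, agent }, (res) => {
      const localPort = res.socket.localPort;
      res.resume(); // drain the body so the socket can go back to the pool
      res.on("end", () => resolve(localPort));
    });
    req.on("error", reject);
  });
}

// Start a throwaway server, make two sequential requests with the given
// keepAlive setting, and report whether both travelled over the same socket.
async function reusesSocket(keepAlive) {
  const server = http.createServer((req, res) => res.end("ok"));
  await new Promise((resolve) => server.listen(0, "127.0.0.1", resolve));
  const agent = new http.Agent({ keepAlive });
  try {
    const port = server.address().port;
    const first = await getLocalPort(agent, port);
    const second = await getLocalPort(agent, port);
    return first === second;
  } finally {
    agent.destroy(); // close any pooled sockets
    server.close();
  }
}
```

Calling `reusesSocket(true)` should resolve to `true`, because the second request picks up the idle socket left by the first. With `keepAlive` set to `false`, each request would normally get a fresh socket.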

502 Errors and their sources in your application

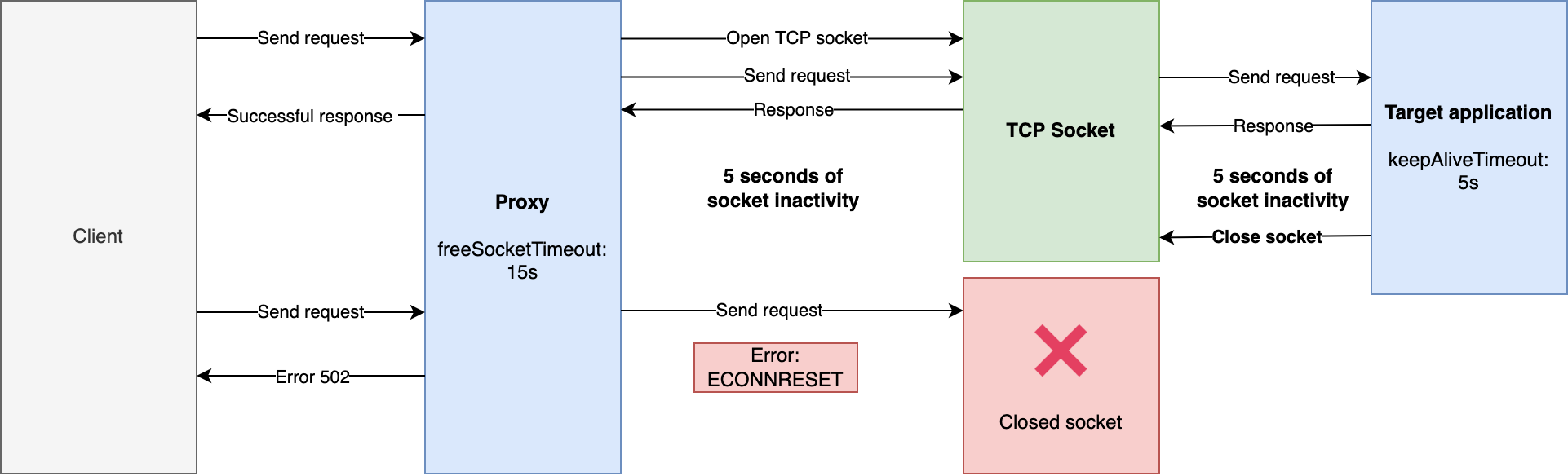

In the real world (and in our product), the default timeout of the widely used agentkeepalive was 15 seconds, much longer than the Node.js timeout of 5 seconds. How does this look in practice?

Here is what happens in the diagram above:

- Client requests a resource from the proxy

- Proxy opens a reusable TCP socket to the target application with a 15 second timeout

- Request is sent down the socket to the target application, and a successful response comes back

- More than 5 seconds later, no requests have hit the target application, so the application closes the socket.

- The proxy receives another request and believes it has an open connection that it can use. It re-uses a reference to the same TCP socket as before, but the socket on the end of the target application is actually closed.

- The proxy sends the request down a dead socket and Node.js throws an ECONNRESET

- The proxy responds to the client with a 502 Bad Gateway error.
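The step where the target closes an idle socket can be observed directly. The sketch below is an illustration, not our proxy code: it uses a raw `net` socket as the client so that no client-side agent logic gets in the way, and a hypothetical helper `measureIdleClose` that reports how long the connection stays open after the response arrives.

```javascript
const http = require("node:http");
const net = require("node:net");

// Resolve with the number of milliseconds between the last response bytes
// and the moment the server closes the idle keep-alive connection.
function measureIdleClose(serverKeepAliveMs) {
  return new Promise((resolve, reject) => {
    const server = http.createServer((req, res) => res.end("ok"));
    server.keepAliveTimeout = serverKeepAliveMs;
    server.listen(0, "127.0.0.1", () => {
      const socket = net.connect(server.address().port, "127.0.0.1");
      let idleSince = 0;
      socket.on("connect", () => {
        // A bare HTTP/1.1 request; keep-alive is the HTTP/1.1 default.
        socket.write("GET / HTTP/1.1\r\nHost: localhost\r\n\r\n");
      });
      socket.on("data", () => {
        idleSince = Date.now(); // the connection is idle from here on
      });
      socket.on("error", reject);
      socket.on("close", () => {
        server.close();
        resolve(Date.now() - idleSince);
      });
    });
  });
}
```

With `measureIdleClose(300)`, the measured time should be at least roughly 300 ms. Some Node.js versions may add extra slack on top of the configured value, so treat the number as a lower bound.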

Getting around ECONNRESET

The solution to the dead socket issue is for your client to have a shorter socket timeout than the target. If your target has a 5 second timeout, and your client has a 4 second timeout, the client will never try to send a request down a dead socket. If your client has a larger timeout than the target, at some point, the client will think it has an open socket that is actually dead.
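The rule above can be written down as a small check to run at startup. This is a sketch under assumptions: `isKeepAliveConfigSafe` and the `safetyMarginMs` parameter are hypothetical names, and the 1 second default margin is simply the gap between the 4 and 5 second values in the example.

```javascript
// Return true when the client drops idle sockets before the target does.
// safetyMarginMs is a hypothetical buffer for latency and timer drift.
function isKeepAliveConfigSafe(
  clientFreeSocketTimeoutMs,
  targetKeepAliveTimeoutMs,
  safetyMarginMs = 1000
) {
  return clientFreeSocketTimeoutMs + safetyMarginMs <= targetKeepAliveTimeoutMs;
}

// Client at 4 seconds against a target at 5 seconds: safe.
const safe = isKeepAliveConfigSafe(4000, 5000);

// Client at 15 seconds against a target at 5 seconds: the dead socket case.
const unsafe = isKeepAliveConfigSafe(15000, 5000);
```

Here `safe` is `true` and `unsafe` is `false`.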

In response to reports on this issue, agentkeepalive maintainers have smartly (and finally) decided to set the default freeSocketTimeout to 4 seconds in version 4.2.0 of agentkeepalive, released on December 30th 2020.

If you're looking to use longer timeouts, or you're using a version of agentkeepalive earlier than 4.2.0, you can raise the default timeouts of your target Node.js app as follows:

```javascript
const express = require("express");
const http = require("http");

const app = express();
const server = http.createServer({}, app).listen(3000);

// This is the important stuff: idle sockets are kept for 61 seconds,
// and headersTimeout stays 1 second above keepAliveTimeout.
server.keepAliveTimeout = (60 * 1000) + 1000;
server.headersTimeout = (60 * 1000) + 2000;
```

Notes on AWS ELB 502's with Node.js servers

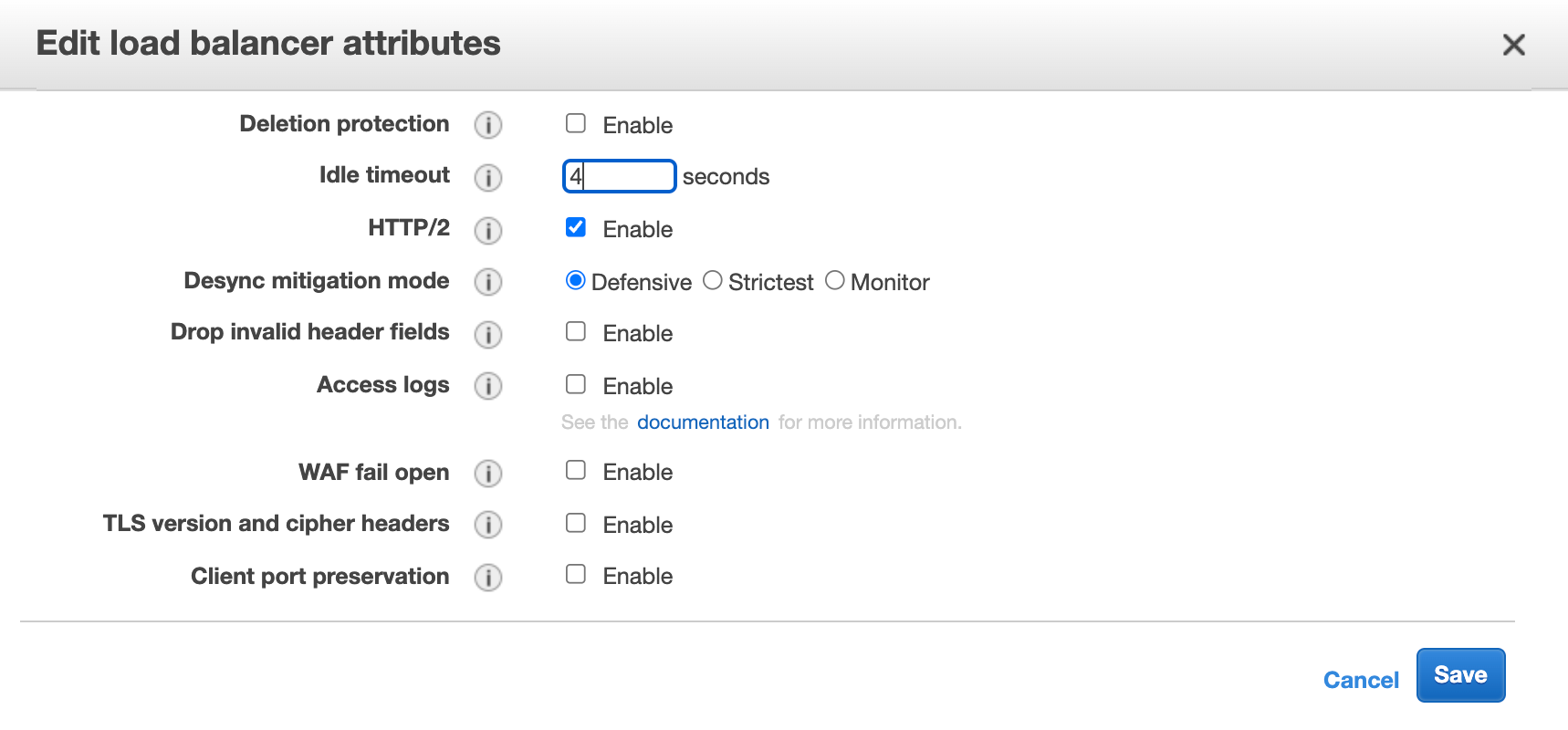

If you're using ELB to point traffic at your Node.js apps as we are, you should know that it has a default idle timeout of 60 seconds, which is greater than the Node.js default keep-alive timeout of 5 seconds. This mismatch will cause your ELB to return intermittent 502 errors.

You can set your load balancer's "Idle timeout" attribute to 4 seconds to be compatible with the Node.js defaults.

Alternatively, you can edit the timeouts on your target Node.js app as indicated in the previous section.
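If you take the second route, the server timeouts can be derived from the load balancer's idle timeout rather than hard-coded. The following is a minimal sketch: `applyKeepAliveTimeouts` is a hypothetical helper, and the 1 second offsets mirror the ones used in the previous section.

```javascript
const http = require("node:http");

// Set the server's timeouts so that it outlives the upstream idle timeout:
// keepAliveTimeout is 1 second above the upstream value, and
// headersTimeout is 1 second above keepAliveTimeout.
function applyKeepAliveTimeouts(server, upstreamIdleTimeoutMs) {
  server.keepAliveTimeout = upstreamIdleTimeoutMs + 1000;
  server.headersTimeout = server.keepAliveTimeout + 1000;
  return server;
}

// Assumes the ELB default idle timeout of 60 seconds.
const server = applyKeepAliveTimeouts(http.createServer(), 60 * 1000);
```

This gives a `keepAliveTimeout` of 61000 ms and a `headersTimeout` of 62000 ms, the same values as the Express example in the previous section.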